This page contains information on standalone SoapUI Pro that has been replaced with ReadyAPI.

To try enhanced data-driven testing functionality, feel free to download a ReadyAPI trial.

ReadyAPI adds a number of useful features to the already feature-packed open-source version. One of the most popular is the DataSource TestStep, which together with the DataSourceLoop TestStep makes the creation of complex data-driven tests a breeze (almost), allowing you to use data from a number of sources (Excel, JDBC, XML, etc.) to drive and validate your functional TestCases. You can even combine multiple DataSources to have them feed each other.

When running a data-driven TestCase under a LoadTest with a varying number of threads, there are a number of issues to consider regarding how threads consume and share the data provided by the DataSource. Let's dig into some of this to see how you can make it work as required.

Basically, DataSources can behave in two ways during a LoadTest: they can be shared between the running threads or created independently for each thread. In the latter scenario, each thread runs through the DataSource as configured in the TestCase, which is pretty straightforward.
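The difference between the two modes can be sketched with a small toy model. This is not ReadyAPI code: the function `rows_seen` and its parameters are hypothetical, and the threads are simulated by taking turns in round-robin order, one row per turn.

```python
from itertools import cycle

def rows_seen(rows, threads, shared):
    """Return the rows each simulated thread processes (toy model only)."""
    if not shared:
        # Independent mode: every thread gets its own full copy of the data.
        return [list(rows) for _ in range(threads)]
    # Shared mode: one iterator is drained collectively by all threads.
    source = iter(rows)
    seen = [[] for _ in range(threads)]
    for thread_id in cycle(range(threads)):
        row = next(source, None)
        if row is None:  # the shared source is exhausted
            break
        seen[thread_id].append(row)
    return seen

rows = ["r1", "r2", "r3", "r4", "r5", "r6"]
independent = rows_seen(rows, threads=3, shared=False)
shared = rows_seen(rows, threads=3, shared=True)
print(independent)  # every thread sees all six rows
print(shared)       # [['r1', 'r4'], ['r2', 'r5'], ['r3', 'r6']]
```

In the shared case each row is used exactly once across the whole LoadTest. In a real run, which thread gets which row depends on timing rather than on a fixed round-robin order.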

In the LoadTest Options dialog there is an option to update the LoadTest statistics on a TestStep level, which makes monitoring data-driven LoadTests quite easy.

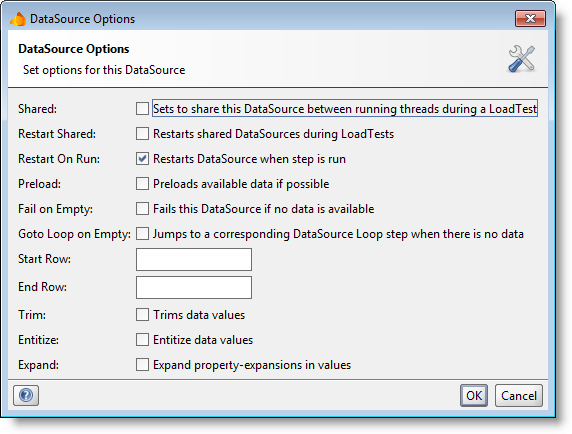

The tricky part comes when you choose to share the DataSource between threads during your LoadTest by selecting the Shared option in the DataSource Options dialog.

When selected, ReadyAPI will create only one instance of the DataSource and share it between all threads in the LoadTest. This can be desirable if, for example, you have XXX rows of data that you want to run through without using any row more than once. But what actually happens when you run a LoadTest like this?

- The LoadTest starts and ReadyAPI initializes the DataSource to be shared between all threads

- The threads run their TestCases and collectively consume the DataSource until it is exhausted

- Now what? Should all threads finish, or should the DataSource be reset and the threads start over from the beginning?

If the threads should finish, the limit of the TestCase must be set to the same number of runs as the number of threads. If the limit is set higher, the TestCase will be run again from the beginning (since the limit has not been reached), but now with an exhausted DataSource. Depending on whether the Goto Loop on Empty or Fail on Empty options are set, the TestCase will behave accordingly, but in any case this is probably not what was intended.

Conclusion 1: If you want to consume a Shared DataSource exactly once in a LoadTest, set the limit to the same number of runs as configured threads.
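A rough way to see why the limit should match the thread count is to count how many TestCase runs would start against an already exhausted DataSource. The sketch below is a simplified model, not ReadyAPI's scheduler: `count_empty_runs` is a hypothetical helper, threads take turns in a fixed order, and the limit is treated as the total number of runs started.

```python
def count_empty_runs(row_count, threads, run_limit):
    """Count runs that never receive a row from the shared DataSource."""
    remaining = row_count                  # rows left in the shared DataSource
    runs_started = min(threads, run_limit)
    consumed = [0] * runs_started          # rows used by each thread's current run
    active = list(range(runs_started))
    empty_runs = 0
    while active:
        for t in list(active):
            if remaining > 0:
                remaining -= 1             # loop back with the next row
                consumed[t] += 1
                continue
            # DataSource exhausted: the current run of this thread ends.
            if consumed[t] == 0:
                empty_runs += 1            # this run never saw any data
            if runs_started < run_limit:
                runs_started += 1          # limit not reached: start another run
                consumed[t] = 0
            else:
                active.remove(t)           # limit reached: the thread stops
    return empty_runs

print(count_empty_runs(row_count=10, threads=5, run_limit=5))  # 0
print(count_empty_runs(row_count=10, threads=5, run_limit=8))  # 3
```

With the limit equal to the number of threads, no run starts on an empty DataSource. Under these assumptions, every run beyond that starts with nothing left to consume.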

On the other hand, if you want to start over and consume the DataSource again from the beginning, you need to select the Restart Shared option in the LoadTest Options dialog. Now when a new TestCase run starts, the exhausted DataSource will be reinitialized and consumed again. The limit of the LoadTest can be set either as a number of runs or as a number of seconds; the threads will continue consuming the DataSource from the beginning until the limit has been reached.

Synchronization issues

Since we're talking about threads here, strange things can happen when using the Shared and Restart Shared options as described above. Let's say you have a TestCase with a DataSource and a DataSourceLoop feeding a couple of sequential requests that each take a couple of seconds to finish. Your LoadTest has 5 threads happily eating away at your DataSource when one thread gets to the point where the DataSource has been exhausted by the previous execution of the DataSourceLoop. This thread will finish, and if the TestCase ends there and the limit has not been reached, the TestCase will restart, thereby also restarting the shared DataSource.

The other threads are still happily chewing away at their requests, and when they come to the DataSourceLoop they won't "notice" that the DataSource has been restarted; they will continue with the new one just as happily as before (since it is shared, right?). The result is that if the limit is set by number of runs, it can take quite some time to actually finish these, since 4 threads will never finish and only 1 will be used to restart the DataSource. In the statistics table you will see some really strange numbers: a low number of total runs (since most threads never finish) but a large number of TestStep runs (since the corresponding threads are "stuck in the loop").

Maybe this sounds esoteric, but if you are running a shared and resettable DataSource in your LoadTest, you are rather likely to run into this (one of our customers did).
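The "stuck in the loop" effect can be reproduced with a small deterministic model. Everything here is an assumption made for illustration: `simulate_restart_shared` is a hypothetical function, threads arrive at the DataSourceLoop in a fixed round-robin order, and a thread that finds the DataSource exhausted ends its run, restarts the DataSource, and takes the first row of its next run.

```python
def simulate_restart_shared(row_count, threads, rounds):
    """Model Shared + Restart Shared; assumes row_count >= threads."""
    remaining = row_count - threads   # every thread starts by taking one row
    runs_completed = [0] * threads
    loop_arrivals = [0] * threads     # how often each thread reaches the loop step
    for _ in range(rounds):
        for t in range(threads):
            loop_arrivals[t] += 1
            if remaining > 0:
                remaining -= 1        # data available: loop back with the next row
            else:
                # Exhausted: this run ends, the next run restarts the
                # shared DataSource and takes its first row.
                runs_completed[t] += 1
                remaining = row_count - 1
    return runs_completed, loop_arrivals

runs, arrivals = simulate_restart_shared(row_count=10, threads=5, rounds=20)
print(runs)      # [10, 0, 0, 0, 0]
print(arrivals)  # [20, 20, 20, 20, 20]
```

In this model only the first thread ever completes a run, while the other four keep looping over freshly restarted data. With real timing, the thread that hits the exhausted DataSource may vary, but the pattern of few completed runs against many TestStep executions is the same.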

A possible fix is for each TestCase execution to keep track of which DataSet of the DataSource it is consuming, so that when it comes to the DataSourceLoop and the data available is from the "wrong" DataSet (because the DataSource has been restarted), it will not loop back but move on and (as in the example) end the TestCase.
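The idea can be illustrated with a generation counter on the shared DataSource. This is only a sketch of the approach described above, not how ReadyAPI implements it: `simulate_with_dataset_check`, `generation`, and `run_generation` are hypothetical names, and threads again take turns in a fixed round-robin order.

```python
def simulate_with_dataset_check(row_count, threads, rounds):
    """Model Shared + Restart Shared with a per-run DataSet check.

    Assumes row_count >= threads.
    """
    remaining = row_count - threads   # every thread starts by taking one row
    generation = 0                    # incremented on every DataSource restart
    run_generation = [0] * threads    # DataSet each thread's current run began with
    runs_completed = [0] * threads
    for _ in range(rounds):
        for t in range(threads):
            stale = run_generation[t] != generation
            if remaining > 0 and not stale:
                remaining -= 1        # same DataSet, data left: loop back
                continue
            # Either the data ran out or it belongs to a newer DataSet:
            # do not loop back, end this run instead.
            runs_completed[t] += 1
            if remaining == 0:
                generation += 1       # Restart Shared: reinitialize the DataSource
                remaining = row_count
            run_generation[t] = generation
            remaining -= 1            # first row of the new run
    return runs_completed

print(simulate_with_dataset_check(row_count=10, threads=5, rounds=20))
# [10, 10, 10, 10, 10]
```

In this model every thread completes a run each time the DataSource is exhausted, so the number of total runs grows in step with the number of passes over the data.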

Conclusion 2: If you are using both the Shared and Restart Shared options, consider using the Time limit instead of Total Runs, and be prepared for some confusing numbers in the Statistics Table.