Best Practices: Working with Data

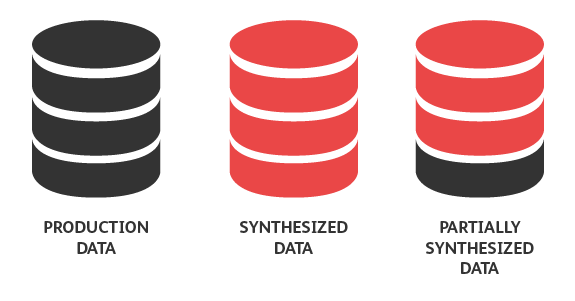

Test data creation and management is often the biggest challenge when creating tests, manual or automated. For the tester, there are two problems: where do I get it, and how do I keep track of it? There are three common sources of data in testing: production data, synthesized data, and partially synthesized data.

Production Data

As the name suggests, production data is a copy of your production database. As a tester, you should treat production data as your first choice.

Production data is a true representation of what your application is going to face, warts and all, once it gets released. In terms of realism, which is what you want in data, it is unmatched. It will also generally have much more variety than a tester can come up with, since it was entered by (hopefully many) different users.

However, there are some significant drawbacks. The data has to be periodically extracted from production. This could have an impact on operation of production (possible downtime or slowing of response time), but it will definitely result in downtime of your test environment. Other departments, namely operations, will have to be involved to make this happen, which will increase their workload.

There are also privacy concerns, as production data could include personal information such as tax identification numbers, dates of birth, credit card numbers, etc. This personal information would have to be obfuscated before it is imported into the test environment, adding further workload for the operations people. Make sure you account for all this when giving time estimates.
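As a rough illustration of the obfuscation step, the sketch below masks a few sensitive fields before a record is loaded into the test environment. The field names, the salt, and the salted-hash approach are assumptions chosen for the example, not a recommended scheme; hashing short values such as dates of birth is weak protection on its own, so follow your security department's guidelines for anything real.

```python
import hashlib

# Hypothetical column names; adjust to your own schema.
SENSITIVE_FIELDS = {"tax_id", "date_of_birth", "credit_card_number"}


def obfuscate_value(value: str, salt: str) -> str:
    """Map a value to a deterministic token, so the same input always
    yields the same output and joins across tables keep working."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return "obf_" + digest[:12]


def obfuscate_record(record: dict, salt: str) -> dict:
    """Return a copy of the record with the sensitive fields masked."""
    masked = {}
    for key, value in record.items():
        if key in SENSITIVE_FIELDS and value is not None:
            masked[key] = obfuscate_value(str(value), salt)
        else:
            masked[key] = value
    return masked


# Made-up sample row, for illustration only.
row = {"id": 42, "tax_id": "000-00-0000", "date_of_birth": "1970-01-01"}
masked_row = obfuscate_record(row, salt="example-salt")
```

Non-sensitive fields such as `id` pass through unchanged, so relationships between extracted tables survive the masking.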

Synthesized Data

Synthesized data is data that is created specifically for testing. There are situations when production data is not an option: the production database is too large, certain data is not available (for example, edge cases or boundary tests), or data is not yet available (for new features or new products). When synthesizing new data, you normally want to give preference to doing so through the API, rather than writing the data directly into the database. This will ensure correct key constraints are observed; if not, you just discovered a bug!
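A minimal sketch of synthesizing boundary-test data through an API rather than the database might look like the following. The `/accounts` path, the payload shape, the balance limits, and the `fake_post` stand-in are all hypothetical; in a real framework `post` would wrap your HTTP client.

```python
from typing import Callable


def boundary_values(minimum: int, maximum: int) -> list:
    """Values just outside, on, and just inside each limit."""
    return [minimum - 1, minimum, minimum + 1,
            maximum - 1, maximum, maximum + 1]


def synthesize_accounts(post: Callable[[str, dict], dict],
                        minimum: int, maximum: int) -> list:
    """Create one account per boundary value by calling the API."""
    responses = []
    for balance in boundary_values(minimum, maximum):
        payload = {"owner": "test-user", "balance": balance}
        responses.append(post("/accounts", payload))
    return responses


def fake_post(path: str, payload: dict) -> dict:
    """Stand-in for a real API: accepts balances from 0 to 1,000,000."""
    accepted = 0 <= payload["balance"] <= 1_000_000
    return {"path": path, "status": 201 if accepted else 422}


results = synthesize_accounts(fake_post, 0, 1_000_000)
statuses = [r["status"] for r in results]
```

Because the data goes through the API, the out-of-range values are rejected by the application itself; a direct database insert would have bypassed that validation.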

When you are developing CRUD tests this will mean using synthesized data. If you take a subset of your CRUD tests – just the create and read operations – you can use those as data-load operations, which can be used in subsequent testing. (See the Best Practices section article Structuring Your Tests.)
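The idea of reusing the create and read steps as a data loader can be sketched as below. `InMemoryApi` is a hypothetical stand-in for your application's API, and the payloads are invented for the example.

```python
class InMemoryApi:
    """Hypothetical stand-in for the application's API."""

    def __init__(self):
        self._items = {}
        self._next_id = 1

    def create(self, payload: dict) -> int:
        item_id = self._next_id
        self._next_id += 1
        self._items[item_id] = dict(payload)
        return item_id

    def read(self, item_id: int) -> dict:
        return dict(self._items[item_id])


def create_and_read(api, payload: dict) -> int:
    """The create and read half of a CRUD test: create, then verify."""
    item_id = api.create(payload)
    assert api.read(item_id) == payload, "read-back does not match"
    return item_id


def load_data(api, payloads: list) -> list:
    """Reuse the create/read check as a data-load step for later tests."""
    return [create_and_read(api, payload) for payload in payloads]


api = InMemoryApi()
loaded_ids = load_data(api, [{"name": "widget"}, {"name": "gadget"}])
```

Subsequent tests can then start from `loaded_ids` instead of creating their own records.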

Partially Synthesized Data

Partially synthesized data is a blend of synthesized data and production data; it's a trade-off. This approach is used when the production database is prohibitively large and the data is also too complex for synthesizing it to be practical.

Imagine this scenario: You are tasked with testing multiple applications in a bank, specifically concentrating on interactions between checking, saving, mortgage, investing, payment, and other accounts. In a real bank each of these is normally handled not only by separate business units, but by completely different systems and APIs. You will hopefully be given business requirements, but will have to get clarifications from the business side.

The clarifications will probably be accompanied by real-world examples, and you can turn to the specification by example method. (See the World Of API Testing section article Test First.)

With help from your business colleagues, you select several real-world customer accounts that have enough real-world variety in their data to cover most of the examples in your specification. You can match up selected users to story scenarios, and instead of writing your user stories as: “As a user that has a savings account with >$1million, and an investment account, and this situation, and that portfolio, I want to ...”, you can write your user stories as “As John Dean, I want to ...”

Once business, test, and development all come to an agreement on the exact attributes of this fictional John Dean (due to privacy laws, you never want to use real customers), your user stories will become much more concise. This is called Persona-Driven Development. You can then send a request to operations to extract all the users with particular IDs, and all of their associated accounts and transactions, obfuscate their personal information according to your security department guidelines, and import only that data into the test environment.

Tracking the Data

Regardless of where the data came from, your framework has to keep track of it somehow.

The most common method to track test data is in a separate resource file: an Excel spreadsheet, an XML-formatted file, or even just a JSON key-value pair file. In this approach the external resource file contains all the test data that is present in the test environment database. The test framework reads and parses this resource file, feeds the data into the individual calls, and checks the responses against the expected outputs, which are also part of the resource file.
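A resource-file-driven test of this kind could be sketched as follows. The JSON layout (`input` and `expected` keys) and the `call_under_test` placeholder are assumptions made for the example; the resource is inlined as a string here so the sketch runs on its own, where a real framework would read it from a file.

```python
import json

# Inlined stand-in for an external JSON resource file.
RESOURCE_TEXT = """
[
  {"input": {"a": 2, "b": 3}, "expected": 5},
  {"input": {"a": -1, "b": 1}, "expected": 0}
]
"""


def call_under_test(a: int, b: int) -> int:
    """Placeholder for the real API call being tested."""
    return a + b


def run_cases(resource_text: str) -> list:
    """Feed each input to the call and collect any mismatches."""
    failures = []
    for case in json.loads(resource_text):
        actual = call_under_test(**case["input"])
        if actual != case["expected"]:
            failures.append({"case": case, "actual": actual})
    return failures


failures = run_cases(RESOURCE_TEXT)
```

Note that the expected values live in the file, which is exactly why the file has to stay in step with whatever is in the database.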

The biggest problem with this approach is that the resource file has to be synchronized with the database that the application uses. This synchronization can become difficult for complex data sets, or in the case of multiple test environments it can become a nightmare.

A better solution is to not use any external resource, and just use the application database itself. The test framework, instead of reading and parsing the resource file, would just make a call to fetch back (or even synthesize) the data that a particular test needs. Since the data is retrieved on the fly, there is no need to worry about the data ever being outdated.

As you use partially synthesized data and concise user stories, your automated tests start to become equally concise. You only need to keep track of your users (either in a very small resource file, or just parametrized into a few variables right in the test source code) and then use the APIs to find anything else your test might need.
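One way this could look in practice: the only data the test code tracks is a persona-to-ID mapping, and everything else is fetched through the API when the test runs. The `PERSONAS` mapping, the user ID, and `FakeBankApi` with its account data are hypothetical stand-ins for illustration.

```python
# The only test data tracked in the source code: persona name to user ID.
PERSONAS = {"John Dean": "user-1001"}


class FakeBankApi:
    """Hypothetical stand-in for the real account-lookup API."""

    def __init__(self):
        self._accounts = {
            "user-1001": [
                {"type": "savings", "balance": 1_250_000},
                {"type": "investment", "balance": 300_000},
            ]
        }

    def accounts_for(self, user_id: str) -> list:
        return list(self._accounts.get(user_id, []))


def accounts_of_type(api, persona: str, account_type: str) -> list:
    """Look up the persona's accounts on the fly and filter by type."""
    user_id = PERSONAS[persona]
    return [account for account in api.accounts_for(user_id)
            if account["type"] == account_type]


bank_api = FakeBankApi()
savings = accounts_of_type(bank_api, "John Dean", "savings")
```

The test never hard-codes balances or account numbers, so nothing needs updating when the underlying data is refreshed.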

Data Specification

Specification of test data is just as important as specification of test cases. The source (real-world or synthetic) is sometimes dictated by the situation; in cases where either source will do, real-world data should be given preference. Closely linking your test data to your test cases will make your test cases more concise and easier for non-technical team members to understand.